Multi-lightmap walkthrough for Kellogg’s Marvel’s Civil War VR

The goal of Kellogg’s Marvel’s Civil War VR was to drop users into the action of the feature film, let them choose Captain America or Iron Man, and then have them fight their way through 8 levels of Crossbones and his henchmen. The levels themselves were built from film references, with a generous helping of our own creative interpretation, resulting in 3 main maps: Lagos, Berlin and the Leipzig Airport.

We set the experience in the early evening, partly to reduce eye strain but largely to allow for much more dramatic lighting. Because our target was mobile VR, however, we were severely limited in what we could do with real-time lights and shadows. To ensure the experience ran on as many devices as possible, we needed to bake the lighting into the scenes using light maps.

Traditionally, light maps meant static lighting. Our goal, however, was to build a much more dynamic experience. This led us to the multi-lightmap technique: a way to bring far more life into a VR environment without breaking the lighting budget.

Here’s a breakdown of the Multi-lightmap process:

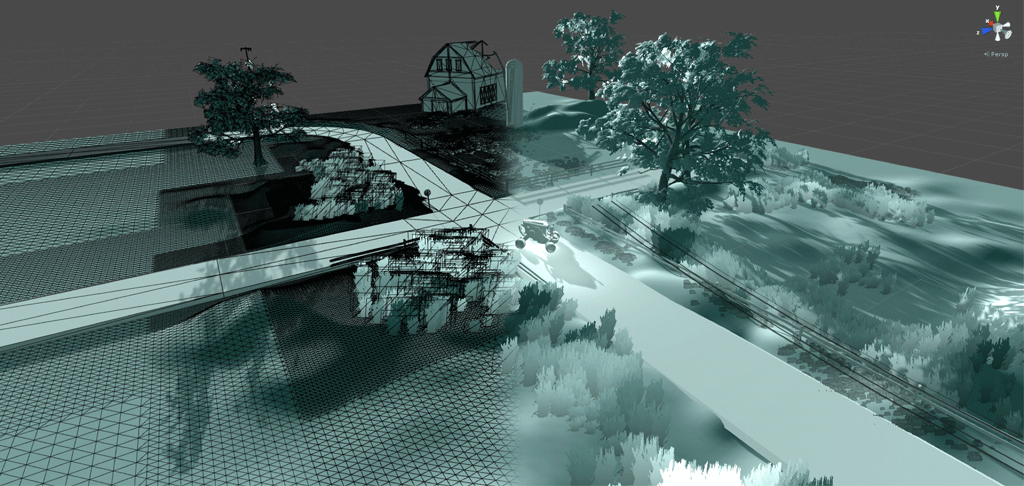

First, the environments were divided into sectors. Each sector was given one main light and a couple of accent lights. Generally, the main light is sunlight or another primary light source that is consistent across all sectors. The accent lights are secondary sources, such as lamp posts or traffic signals, and each has its own colour and intensity, animated as needed. These lights should not affect any objects outside their sector, or unexpected results may occur.

Once the map was subdivided into sectors, we began lighting. This is where we took a different approach: instead of baking the light colour and intensity, we used the light map to bake only light position and shadows. This was accomplished by storing the lighting information for each light in its own RGB channel of the light map.

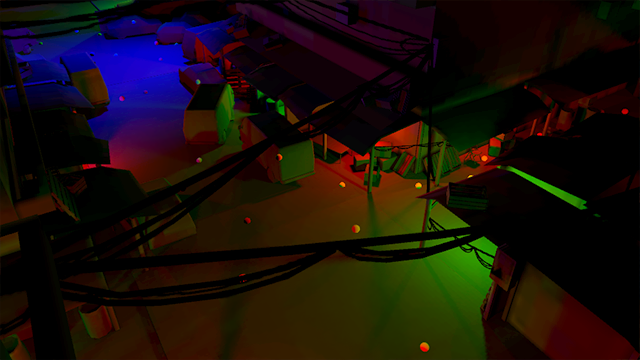
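The channel layout can be illustrated with a short Python sketch that packs a single texel. It assumes each light was baked on its own into a grayscale value in [0, 1] (falloff and shadow combined) and that the alpha channel holds ambient occlusion; `pack_texel` and `unpack_texel` are hypothetical names, and the real bake was done in an external tool rather than with code like this.

```python
# Minimal sketch (assumption): each light is baked separately as a grayscale
# value in [0, 1]; only intensity masks are stored, never colour.


def to_byte(x: float) -> int:
    """Clamp a float to [0, 1] and quantize it to an 8-bit channel value."""
    return round(min(max(x, 0.0), 1.0) * 255)


def pack_texel(main: float, accent_a: float, accent_b: float, ao: float) -> tuple:
    """Pack three per-light bakes plus ambient occlusion into one RGBA8 texel.

    R = main light, G = first accent light, B = second accent light,
    A = ambient occlusion. Four channels leave room for exactly three lights.
    """
    return (to_byte(main), to_byte(accent_a), to_byte(accent_b), to_byte(ao))


def unpack_texel(texel: tuple) -> tuple:
    """Convert an RGBA8 texel back to floats in [0, 1], as a texture sampler would."""
    return tuple(c / 255 for c in texel)


if __name__ == "__main__":
    texel = pack_texel(0.8, 0.25, 0.0, 1.0)
    print(texel)  # (204, 64, 0, 255)
    print(unpack_texel(texel))
```

Because only masks are stored, nothing in the texel says what colour or brightness a light has; that is left entirely to the material.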

These 3 channels are the reason we are limited to one main light and two accent lights per sector. For example, red could be used for the main light while green and blue are used for the two accents. This gave us something that looked like this:

In this case, the red channel was used for consistent point lighting throughout the Lagos market rather than for the directional light that might be used in a daylight setting. An ambient occlusion pass, saved in the alpha channel, was also included in the light map. With a custom shader, we assigned a colour and intensity to each of the channels and ended up with something like this:

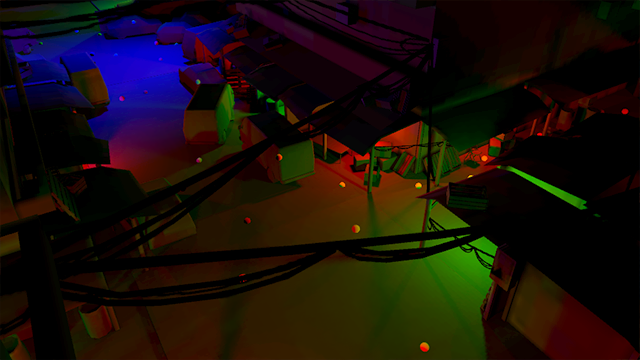
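A CPU-side Python sketch of what such a shader could compute per pixel follows. The post does not give the exact formula, so this assumes a common arrangement: each of the R, G and B masks is multiplied by its own colour and intensity, the alpha-channel AO scales a separate ambient term, and the summed light multiplies the surface albedo. `ChannelLight`, `SectorLighting` and `shade` are hypothetical names standing in for the custom shader's material properties.

```python
from dataclasses import dataclass

Color = tuple  # (r, g, b) floats


@dataclass
class ChannelLight:
    """Colour and intensity assigned (via the material) to one light-map channel."""
    color: Color
    intensity: float


@dataclass
class SectorLighting:
    """Hypothetical stand-in for one sector's material lighting properties."""
    main: ChannelLight      # applied to the R mask
    accent_a: ChannelLight  # applied to the G mask
    accent_b: ChannelLight  # applied to the B mask
    ambient: ChannelLight   # applied to the AO stored in A (assumption)


def shade(albedo: Color, texel: tuple, lights: SectorLighting) -> Color:
    """Composite an unpacked RGBA light-map texel (floats in [0, 1]) into a final colour."""
    r, g, b, ao = texel
    terms = [(r, lights.main), (g, lights.accent_a), (b, lights.accent_b), (ao, lights.ambient)]
    result = []
    for i in range(3):  # red, green, blue components of the output
        incoming = sum(mask * light.color[i] * light.intensity for mask, light in terms)
        result.append(albedo[i] * incoming)
    return tuple(result)


# Two hypothetical presets applied to the same texel: only parameters change.
night = SectorLighting(
    main=ChannelLight((1.0, 0.6, 0.3), 1.2),
    accent_a=ChannelLight((0.2, 0.4, 1.0), 0.8),
    accent_b=ChannelLight((1.0, 0.1, 0.1), 0.5),
    ambient=ChannelLight((0.1, 0.1, 0.2), 0.3),
)
overcast = SectorLighting(
    main=ChannelLight((1.0, 1.0, 1.0), 0.2),
    accent_a=ChannelLight((0.0, 0.0, 0.0), 0.0),
    accent_b=ChannelLight((0.0, 0.0, 0.0), 0.0),
    ambient=ChannelLight((0.8, 0.85, 0.9), 1.0),
)

texel = (0.8, 0.25, 0.0, 0.9)
print(shade((0.5, 0.5, 0.5), texel, night))
print(shade((0.5, 0.5, 0.5), texel, overcast))
```

Because colour and intensity are plain parameters, re-lighting is a matter of swapping values like these rather than rebaking textures. In the overcast-style preset the point lights are dimmed and the AO-weighted ambient term does most of the work.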

One benefit of controlling lighting intensity and colour through a shader is that the lighting becomes easy to adjust in real time. The image below uses the same light maps with different colours and intensities assigned in the materials.

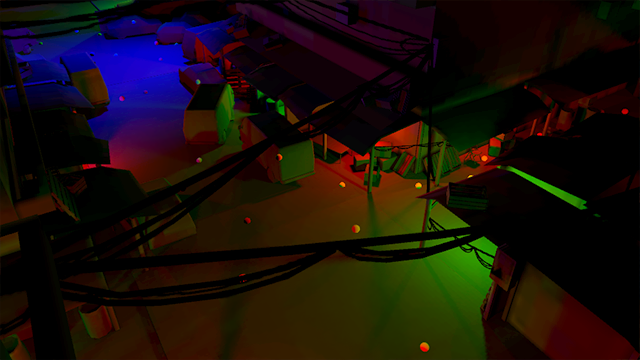

Since the ambient occlusion pass is also available to the shader, more drastic lighting changes are easy to achieve, such as turning the night scene into an overcast day:

Again, this is achieved by adjusting material settings while using the same initial RGB light map. With all these lighting options tied to materials, it can be difficult to keep the colour settings in sync across all the materials in each sector, and even harder to animate them all. To solve this, we came up with a Light Manager script.

This script is added to a null object and pulls in all the material attributes from objects within its volume, which makes them editable in one place. An animation controller is then added, allowing each sector’s lights to be animated with a single animation clip. This, in turn, ensures that all objects affected by the same light share the same settings even if they use different materials, which makes it a very powerful tool.
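As a rough illustration of the Light Manager’s role, the Python sketch below models it outside Unity: a box volume gathers the materials of renderers inside it, and one keyframed clip is sampled and pushed to every gathered material. The class names, the linear keyframe interpolation and the property name `_AccentAIntensity` are all hypothetical; the real script was a Unity component driven by an animation controller, not this code.

```python
from bisect import bisect_right
from dataclasses import dataclass, field

Vec3 = tuple[float, float, float]


@dataclass
class Material:
    name: str
    properties: dict = field(default_factory=dict)  # shader property name -> value


@dataclass
class Renderer:
    position: Vec3
    material: Material


@dataclass
class Curve:
    """Linear keyframe curve: (time, value) pairs sorted by time, clamped at both ends."""
    keys: list

    def evaluate(self, t: float) -> float:
        times = [k[0] for k in self.keys]
        i = bisect_right(times, t)
        if i == 0:
            return self.keys[0][1]
        if i == len(self.keys):
            return self.keys[-1][1]
        (t0, v0), (t1, v1) = self.keys[i - 1], self.keys[i]
        return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


class LightManager:
    """One per sector: gathers materials inside its box and drives shared properties."""

    def __init__(self, lo: Vec3, hi: Vec3, renderers: list):
        self.lo, self.hi = lo, hi
        found = {}  # keyed by identity, since several renderers may share a material
        for r in renderers:
            if self.contains(r.position):
                found[id(r.material)] = r.material
        self.materials = list(found.values())

    def contains(self, p: Vec3) -> bool:
        return all(lo <= c <= hi for lo, c, hi in zip(self.lo, p, self.hi))

    def set_property(self, name: str, value: float) -> None:
        for m in self.materials:
            m.properties[name] = value

    def apply_clip(self, clip: dict, t: float) -> None:
        """Sample every animated property at time t and push it to all gathered materials."""
        for name, curve in clip.items():
            self.set_property(name, curve.evaluate(t))


# Usage: one flickering accent light shared by every material in the sector.
shared, wall, outside = Material("market_stall"), Material("wall"), Material("airport_floor")
manager = LightManager(
    lo=(0.0, 0.0, 0.0), hi=(10.0, 5.0, 10.0),
    renderers=[Renderer((1.0, 0.0, 1.0), shared), Renderer((2.0, 0.0, 3.0), shared),
               Renderer((4.0, 1.0, 4.0), wall), Renderer((20.0, 0.0, 0.0), outside)],
)
flicker = {"_AccentAIntensity": Curve([(0.0, 1.0), (0.1, 0.2), (0.2, 1.0)])}
manager.apply_clip(flicker, 0.05)
print(wall.properties, outside.properties)
```

Writing every value through one manager is what keeps materials in a sector consistent: two different materials lit by the same accent light can never drift apart, because neither owns the value.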

The three light managers for the Lagos map can be seen in yellow below:

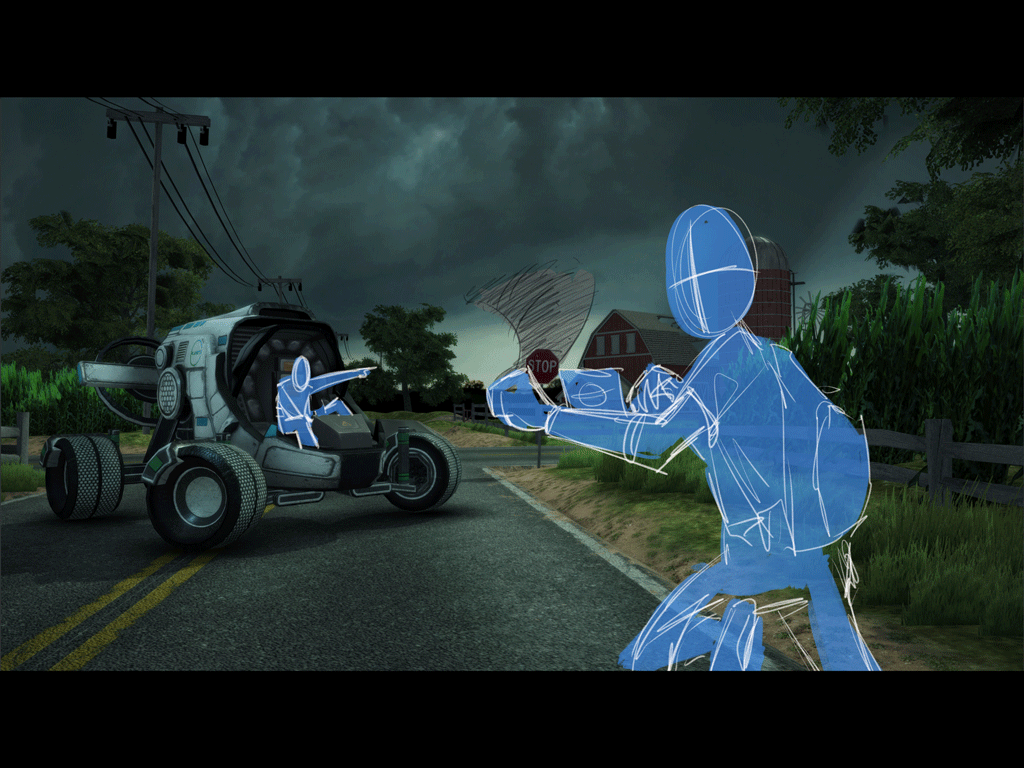

Once we had the environment looking the way we wanted, we realized that the skinned characters running through these maps, Crossbones’ henchmen, also had to be lit. If they were not affected by the flickering lights, or did not change as they passed through the pools of light that made up the levels, it would break the immersion.

The Light Manager gave us access to all of the light colours and intensities, so it was a matter of finding a way to apply them to a mesh without a static light map. The key was using light probes, though not exactly in the way they were intended. Just as the light maps were repurposed, the light probes were baked with the same RGB light data as the static light maps. But since the original lighting was baked outside of Unity, the lighting setup had to be mirrored in Unity to bake the probes.

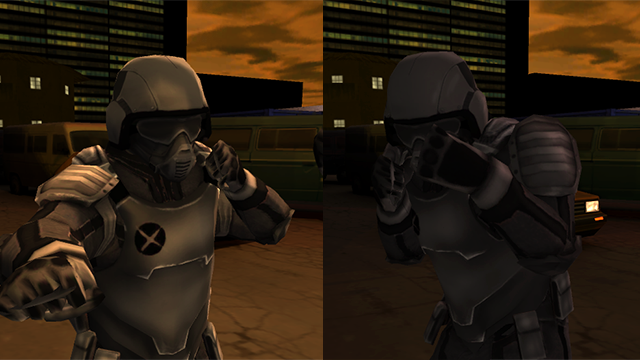

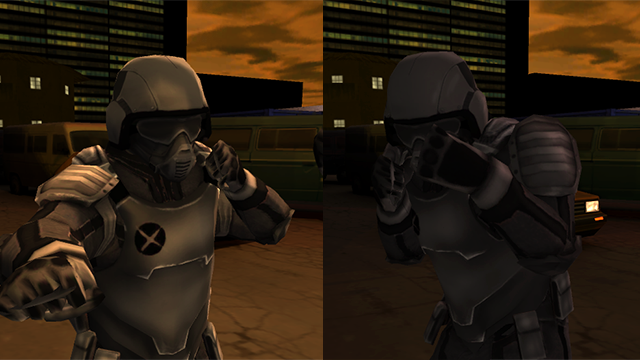
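The character-side idea can be sketched in Python in two steps: blend the RGB data of the four light probes surrounding a character using tetrahedral (barycentric) weights, which is how Unity interpolates between probes, then apply the same named colour and intensity properties the environment uses. Two simplifications are assumed here: each probe is reduced to a single non-directional RGB value (Unity actually stores spherical-harmonics coefficients per probe), and property names such as `_MainColor` are hypothetical.

```python
# Minimal sketch (assumptions): each probe holds one non-directional RGB value
# whose channels mirror the light map (R = main, G/B = accents), and the
# character's position lies inside the tetrahedron formed by the four probes.

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def det3(a: Vec3, b: Vec3, c: Vec3) -> float:
    """Scalar triple product a . (b x c), i.e. the 3x3 determinant of a, b, c."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            - a[1] * (b[0] * c[2] - b[2] * c[0])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))


def tet_weights(p: Vec3, v0: Vec3, v1: Vec3, v2: Vec3, v3: Vec3) -> tuple:
    """Barycentric weights of p in tetrahedron (v0, v1, v2, v3), via Cramer's rule."""
    e1, e2, e3, d = sub(v1, v0), sub(v2, v0), sub(v3, v0), sub(p, v0)
    total = det3(e1, e2, e3)
    w1 = det3(d, e2, e3) / total
    w2 = det3(e1, d, e3) / total
    w3 = det3(e1, e2, d) / total
    return (1.0 - w1 - w2 - w3, w1, w2, w3)


def sample_probes(p: Vec3, positions: list, values: list) -> Vec3:
    """Blend the four probes' RGB data at position p."""
    w = tet_weights(p, *positions)
    return tuple(sum(wi * v[c] for wi, v in zip(w, values)) for c in range(3))


# Hypothetical property names, shared with the environment materials so one
# Light Manager can drive both.
CHANNELS = (("_MainColor", "_MainIntensity"),
            ("_AccentAColor", "_AccentAIntensity"),
            ("_AccentBColor", "_AccentBIntensity"))


def shade_skinned(albedo: Vec3, probe_rgb: Vec3, props: dict) -> Vec3:
    """Apply the sector's colours and intensities to interpolated probe data."""
    result = []
    for i in range(3):
        incoming = sum(mask * props[color][i] * props[intensity]
                       for mask, (color, intensity) in zip(probe_rgb, CHANNELS))
        result.append(albedo[i] * incoming)
    return tuple(result)


# Usage: a henchman standing at the centre of four probes.
probes = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
probe_data = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 0.0)]
props = {
    "_MainColor": (1.0, 0.6, 0.3), "_MainIntensity": 1.2,
    "_AccentAColor": (0.2, 0.4, 1.0), "_AccentAIntensity": 0.8,
    "_AccentBColor": (1.0, 0.1, 0.1), "_AccentBIntensity": 0.5,
}
rgb = sample_probes((0.25, 0.25, 0.25), probes, probe_data)
print(shade_skinned((0.5, 0.5, 0.5), rgb, props))
```

Since `shade_skinned` reads the same property names as the environment, animating `_AccentAIntensity` on the sector’s materials would flicker the characters and the set together.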

To this end, we wrote a shader similar to the one used for the light maps, but one that reads the RGB channels of the light probes instead. Since all of the material attributes had the same names in the Light Manager, we were able to apply the same colour and intensity to the skinned meshes as to the environments. This allowed the henchmen to blend into the scene seamlessly, picking up accent lights and, in the example below, flickering lights:

This technique was used throughout the entire project, allowing us to create dynamic lighting effects and enhance the immersive feel of the experience without using a single real-time light.

Try it for iOS https://itunes.apple.com/us/app/kelloggs-marvels-civil-war-vr/id1093762466 or Android https://play.google.com/store/apps/details?id=com.kelloggs.civilwarvr